Boost your simulation performance with GPUs

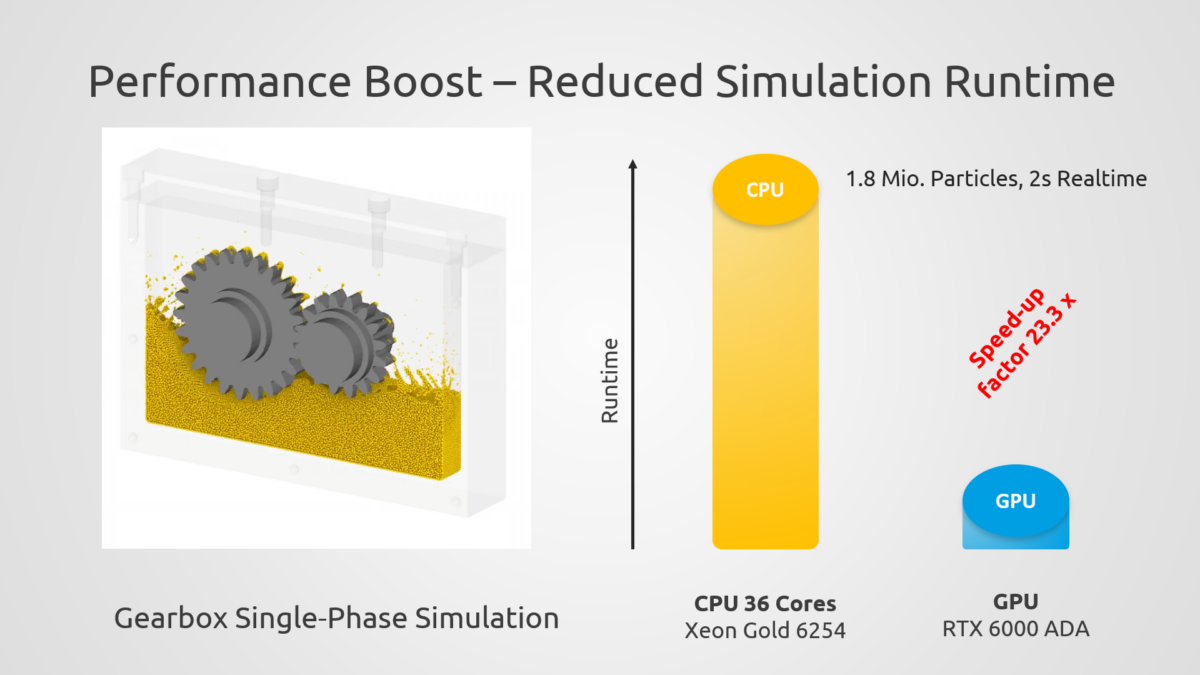

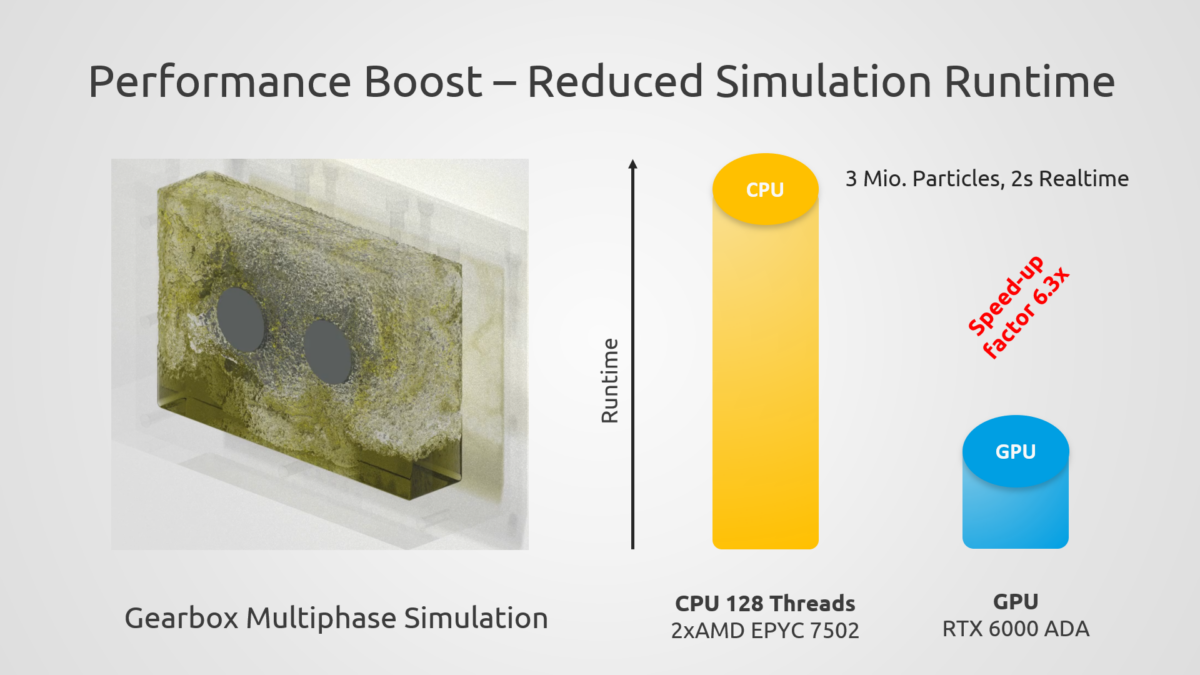

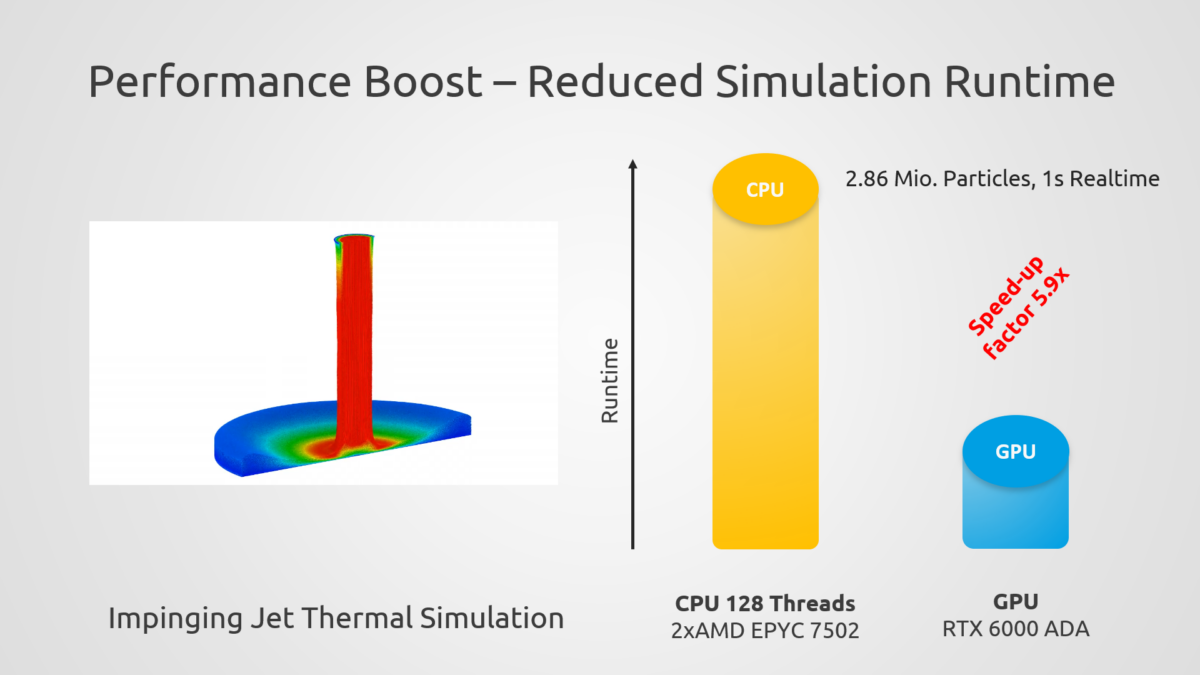

“Time is money.” In the world of simulation, this means being able to run reliable simulations faster.

Throughout its development, PreonLab has introduced game-changing approaches for efficient simulation on CPU hardware, such as its unique IISPH (implicit incompressible SPH) formulation and advanced adaptive refinement and coarsening with Continuous Particle Size.

Over the past decade, GPU development has accelerated rapidly, and this trend is likely to continue. Behind the scenes, we have also been experimenting with GPUs for some time to fully leverage their potential for PreonLab.

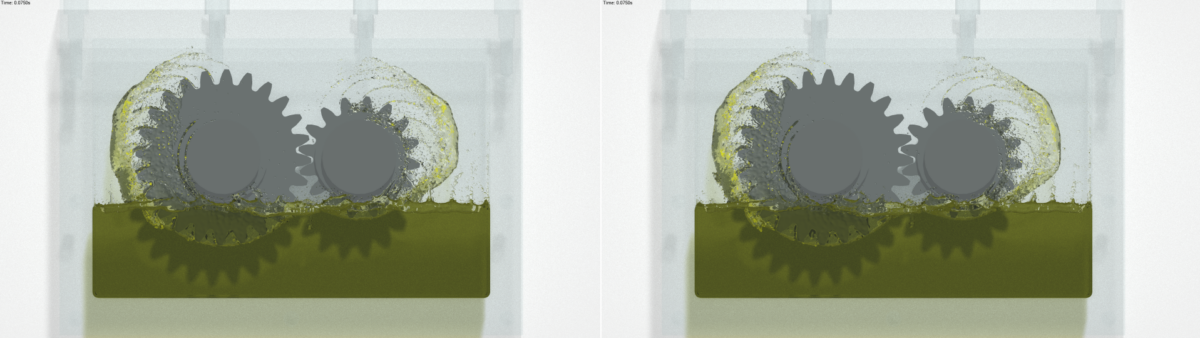

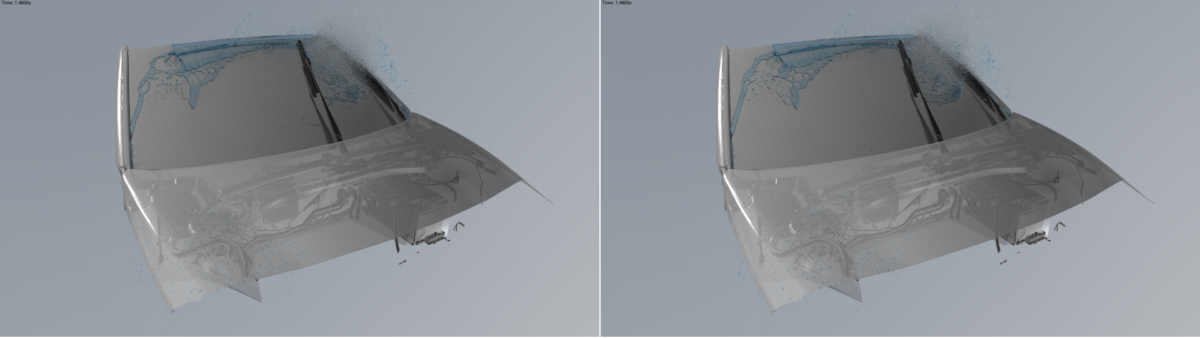

Now, PreonLab’s code has been ported to run on GPUs using NVIDIA’s proprietary CUDA API, pushing the speed barrier for particle-based simulation even further.